What I mean when I write about ELSA

There’s a concept called “ELSA” that I talk about a lot. Because it will inevitably come up pretty frequently in the small essays I’m posting here, it deserves a stand-alone explanation.

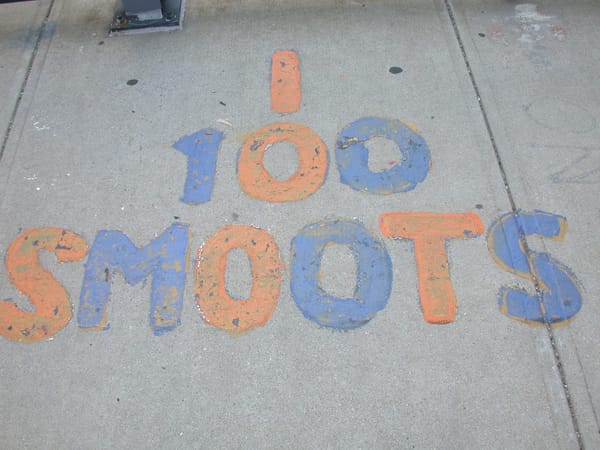

As you might guess, ELSA is an acronym. It stands for “Ethical, Legal, and Social Aspects.” It has a 30+ year history, coming out of a concept called ELSI (Ethical, Legal, and Social Implications) developed at the very end of the 1980s and popularized in the 1990s. But I’ll cover the history another time. What matters now is what ELSA is about, and how it’s used.

ELSA is used in the study of emerging scientific and technological developments. Alongside the scientific and technological work itself, ELSA is meant to bring in people with expertise in its aspects (Ethical, Legal, and Social) at an early stage. Instead of having, for example, an ethicist take potshots at a technology from the outside, ELSA brings the ethicist into the research. Ideally, the ethicist is then in dialog with the people working on whatever scientific or technological development is in question, using their presence in the project to better understand it and to identify potential ethical issues. You could say that one of the things ELSA is meant to do is raise concerns at an earlier stage, in the service of highlighting possible negative outcomes, or improving the way decisions are made and policy is developed.

Why ELSA?

One of the major motivations for this kind of work is the recognition that a lot of scientific and technological development in the 20th century didn’t take broader concerns into account. The innovation galloped ahead, while society more broadly (including law) was left to figure out how to live with the consequences. The examples of this are too numerous to detail, but we’re all living through the fallout. Despite a fairly widespread understanding that implementing things without thinking of the consequences might be a bad idea, we still hear versions of that attitude in the tech sector. “Move fast and break things” is a credo that many still follow, and it goes alongside the idea that technological development will always move faster than the laws meant to govern it. One of the propositions of ELSA is that things don’t need to happen that way.

While the terms ELSA and ELSI originally came into use alongside human genomics, the remit of ELSA has expanded. In the work I do, we use ELSA as a framework for engaging with the development and implementation of AI (whatever AI may happen to mean – but more on that in another post). In the best case scenario, this means that researchers from disciplines including philosophy, sociology, and law work alongside business, government, and also regular members of society, to highlight potential problems and opportunities in AI development and implementation.

How ELSA?

How does that happen? ELSA doesn’t have one specific method. There are lots of different strategies and tools, used by different ELSA researchers. It’s interdisciplinary, so it relies on some amount of collaboration between people working in different fields (that, in itself, is a big task). The amount of cross-field collaboration also varies, depending on the set-up of a given project, and the desires and commitments of the people working on that project. At its best, I’d say that ELSA thrives on bringing the knowledge and standpoints of researchers from different fields together around one area of concern, and looking at that concern from different angles. Whether that’s human genomics or AI, the value of ELSA lies in structurally including researchers who care about not just the legal issues, not just the ethical issues, not just the social issues, but all of them. Those different disciplines then bring their own tools and methods to the table, and hopefully also learn something from each other along the way.

Does it work? I’d say “kind of.” The original ELSI concept came out of the Human Genome Project, meaning it was sitting alongside the biggest development happening at that time in human genomics. Being on the inside of a project that important would mean, you might assume, good access to the world-changing developments being made. There’s been a lot of work over the last 30 years on whether or not ELSI in the Human Genome Project has been successful at doing what it was supposed to do. I won’t try to hash that one out, but I’ll optimistically argue that bothering to include the ELS disciplines at all in the early stages of new scientific developments is an important step, in and of itself. It beats the approach I mentioned earlier of galloping ahead and waiting for society to catch up.

In the kind of ELSA I work on, we’re also invested in getting people from different parts of society around the table. What that can mean in practice is trying to get people with different stakes in a technology to talk to each other in a way that’s comprehensible for everyone – not always easy, but very worthwhile. I can say that in the current AI boom, one thing is more difficult, and that’s getting the big players meaningfully to the table. While the original ELSI work existed because it was baked into the Human Genome Project, giving access to the science as it was happening, a challenge now is finding meaningful points of entry, especially into technological developments that have the potential to change people’s lives for the worse. On the one hand, what this shows is that innovation can and does still suffer from its 20th century God complex, racing ahead and leaving others to pick up the pieces. On the other hand, there’s something hopeful in there for ELSA as a way of studying and intervening in scientific and technological developments. Fragmentation in our sites of study (not just attached to one big project) means that ELSA researchers have more opportunities to go looking for the places where a change is possible, and where there’s a desire to think about consequences. The need for ELSA to be embedded in the developments it studies means that we’re not just screaming into the void; we’re talking to the people making the decisions and sometimes even helping them make more nuanced choices. It’s a start.